Britain's headlong slide from silicon supremacy to silicon irrelevance

The forgotten story of how an obscure English engineer very nearly invented the integrated circuit. And what happened next.

Of all the inventions of the twentieth century, one of the most important - certainly the most symbolic - was the transistor.

The solid state switch, first demonstrated by John Bardeen, Walter Brattain, and William Shockley just before Christmas 1947 at Bell Labs in New Jersey, is widely regarded as the foundation stone for the computer age. As Chris Miller documents in his brilliant book, Chip War, semiconductors are these days central to pretty much everything we do - “Most of the world’s GDP is produced with devices that rely on semiconductors” - so that very first transistor represented a seismic turning point.

But one story which is far less often told is that for a brief moment back in the 1940s and ’50s, it looked just as likely that the silicon chip would be invented not on the east coast of the United States but in England.

Much of the early research into semiconductors - the term technically refers to a whole class of materials which sit somewhere between conductors and insulators - was linked to the invention of radar. Silicon and germanium (that first transistor was actually made of germanium) were pinpointed as promising materials for use in radar detectors, and the leading-edge research into radar and semiconductor rectifiers was happening in the UK.

The upshot was that much of that early American work at Bell Labs, MIT and Purdue University was built atop British findings about these strange, exciting materials. If Bardeen, Brattain and Shockley hadn’t invented the transistor when they did, it’s very likely it would have been invented by someone in Britain (or for that matter France, where research was similarly advanced). Indeed, it’s said that a transistor was actually made in the UK within a week of the eventual Bell announcement. According to one history of the semiconductor, “it was an idea whose time really had come”.

Even in the 1950s, Britain was still at the vanguard of semiconductor research. The Royal Radar Establishment (RRE) at Malvern was a hotbed of thinking about this new technology, then still mostly at the prototype stage. Yes, transistors had been invented and they seemed like a smart replacement for the glass vacuum tubes used up until then as switches and amplifiers in primitive computers and radios. But the real breakthrough, the one that would help birth the computer age, was still a way off.

That idea was to incorporate multiple transistors on a single circuit board - an integrated circuit on a slab of semiconductor. The integrated circuit was arguably an even greater breakthrough than the transistor itself, for this was where “silicon chips” as we know them - those pieces of silicon with multiple different circuits on them, those little brains on a piece of solid state material - came into being.

And the conventional story is that the integrated circuit was invented in the late 1950s by Jack Kilby of Texas Instruments and Robert Noyce of Fairchild Semiconductor. Kilby would eventually be awarded the Nobel Prize for the invention; Noyce died before he could get the prize, but not before setting up Intel and becoming incredibly wealthy.

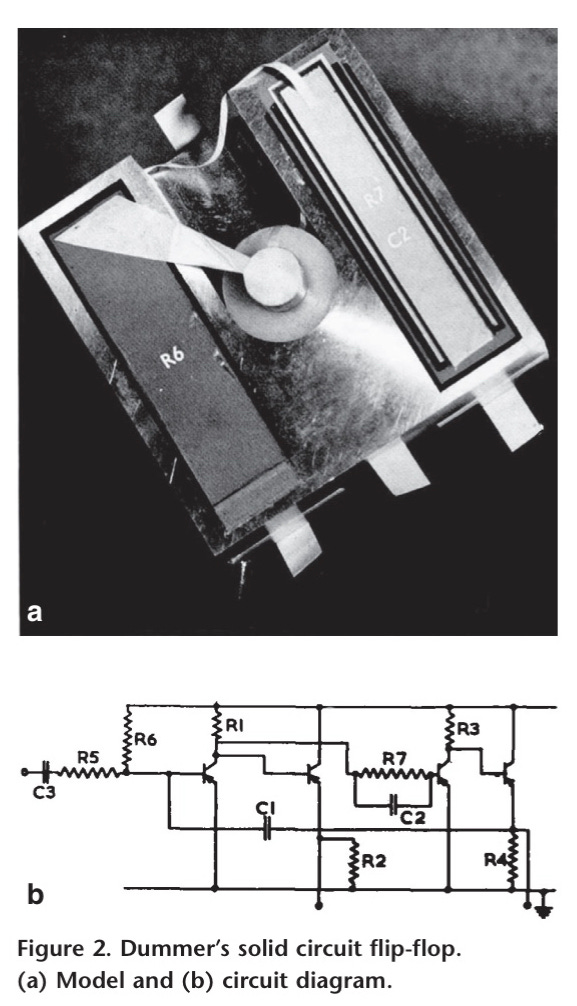

Except that this momentous idea was actually formalised half a decade earlier, not by an American but by an Englishman from Hull called Geoffrey Dummer. Dummer, who worked for the RRE, has been mostly forgotten these days but he came within a whisker of changing history. For, in a speech in Washington in 1952, he laid out the next great leap in semiconductors. When most people were still wondering about how they could use simple transistors, he envisaged a single block of semiconductor substrate consisting of “insulating, conducting, rectifying, and amplifying” sections of transistors with “electrical functions being connected directly by cutting out areas” from the layers. This was an integrated circuit.

It wasn’t just an idea. Dummer got to work trying to make the world’s first integrated circuit. He got tantalisingly close, producing something a few years later which very nearly did that job, a block of silicon with four transistors which he presented at a 1957 RRE event. The problem was that the block wasn’t functional. It was a mock-up. It turns out making a silicon chip was far, far trickier than imagining it.

For years, Dummer and the RRE (along with an electronics company, Plessey) tried to turn slices of silicon crystal into an integrated circuit, but kept running into physical difficulties. There were too many impurities in the materials, which meant the semiconductor simply didn’t work. They experimented with a few ways of attaching components to the circuit, using a “thin film” process and then a diffusion process. The years went by but Dummer kept turning out duds.

What they were running into was a lesson which I’ve explored in Material World. These amazing contraptions are not merely manifestations of brainwork and clever design, but of the most advanced material science in the world. Dummer, the RRE and Plessey were running smack bang into the limitations of 1950s physics and chemistry. Indeed, for much of the early years of semiconductors, one of the biggest challenges was not so much a lack of brainpower as our inability to create substances pure enough to turn into semiconductors, where a single atom out of place could ruin a device. That was especially difficult with silicon, which has an extremely high melting point.

Even today, semiconductors are an exercise in alchemy - in humankind’s ability to turn a pretty commonplace material (quartzite that gets blasted out of quarries) into silicon wafers and thence into computer chips which are worth more than their weight in gold. It is a magical universe which is mostly hidden away from our eyes, yet upon which we all depend. One of the chapters I’m most excited about in the book tells that story - one I hadn’t ever read anywhere else - of the journey of a grain of silicon from the quarry where it leaves the earth, all the way until it becomes a chip inside one of your devices.

It is, I believe, the most extraordinary journey in the modern world - and perhaps most interestingly, the majority of that journey happens before the chip even enters the semiconductor fabrication plants in Taiwan and South Korea where they are “made”. The bit we mostly focus on these days is only the tip of the iceberg. In Material World I try to explain what happens beneath the water as well.

Part of the reason the integrated circuit was invented and then mass-produced in the US rather than the UK was that the Americans got the material part right. Kilby and Noyce and, perhaps even more importantly, someone called Jean Hoerni, who came up with the planar process still used today in chip manufacture, were exploiting material science to birth the semiconductor age.

But for a moment in the 1950s there was a tantalising possibility that the seminal technology of the modern age could have been invented and prototyped in the rolling, green English countryside rather than in California or Texas. As it was, Dummer is today largely forgotten. The RRE was sold into defence company Qinetiq and the site in Malvern was sold off a few years ago - it sounds like it’s being turned into a housing estate, though some intrepid explorers took some photos before its demolition. Plessey was sold to Siemens, which later spun out its semiconductor arm as part of Infineon, now one of Europe’s biggest semiconductor firms. Britain’s semiconductor sector has never scaled the heights it once promised.

That brings us to the National Semiconductor Strategy published by the government today. It is a strange, thin document (quite literally: despite having been worked on for years it is barely 40 pages plus summaries). There is no pretence within the document that the UK could compete with the giants of the semiconductor space, companies like Intel or Taiwan’s TSMC. The amount of money promised for the UK sector is piddling in comparison with the sums being thrown around in the US and EU. As someone put it, the total 10-year commitment from the UK is roughly what a company like TSMC invests in capital expenditure every two weeks.

It’s very easy to squint and say: well, things could have been very different, but it’s also worth noting that Britain has tried and failed to enter this race a fair few times before (for more on these efforts, read this excellent primer by Geoffrey Owen). Decades ago, the UK had centres of excellence in certain disciplines (including compound semiconductors, which people are still citing as our last great hope even today). In other words, we’ve been here before.

All of which might lead you to the conclusion that the government should just step back altogether. But it’s also worth noting that none of the world’s chip giants would exist today were it not for government support - sometimes simple grants, sometimes trade protection, sometimes by providing a reliable and big customer (eg the military and the space programme, which helped finance most of the early chip manufacture in the US). Like it or not, this business tends to rely upon government help - at least to kickstart it.

In other words, for all that the UK government is allergic to industrial strategy - the phrase is, I’m told, actually banned in Whitehall - it’s currently faced with a set of issues which it’s hard to resolve without, well, industrial strategy: from hydrogen to batteries to semiconductors to steel.

More to the point, it’s currently faced with a geopolitical environment where everyone else, including Britain’s major allies, is doing wholehearted industrial strategy, meaning it needs to decide whether to do likewise, whether to join forces with its neighbours or allies (Brexit seems not to have helped on this front) or whether to embrace laissez-faire policies and watch most of its technology industries depart these shores.

But the problem with industrial strategy is you invariably end up having to throw money behind ideas and enterprises which sometimes don’t come off. This is politically awkward. You just don’t know which ones are going to fly and which ones won’t.

I have a bit of personal experience of this. Last year I worked on a story about Newport Wafer Fab, Britain’s last remaining big semiconductor manufacturer. One of the companies featured in that story, which seemed like it might have a shot at success, was called Rockley Photonics. There was a lot of hype and excitement surrounding Rockley. It was making an unusual type of semiconductor which, it was rumoured, might end up as a health sensor inside future versions of the Apple Watch, allowing users to check their glucose levels non-invasively.

So I featured Rockley in that article as an example of a British company which might have a chance of making it big. We interviewed the founder for our TV piece. A few months later, almost out of nowhere, it emerged that Apple had ditched Rockley as its photonics provider, opting to make these chips with a unit of TSMC instead. Rockley filed for bankruptcy in January.

Might Rockley have had a chance if the government had provided it with more support? It’s hard to know for sure, but that is the perennial problem with industrial strategy. You’re putting taxpayer money at risk. Sometimes those bets pay off, but often they don’t.

Consider a few other examples of success/failure from the sector.

The first is something called the transputer, which for a while in the late 1970s and early ’80s was seen as the next big thing in semiconductor design. The UK government decided this was the future and it was a future in which it could reasonably compete. If the transputer, a kind of parallel chip, came good, it could turn the country into a chip manufacturing powerhouse. So millions of pounds of public money were poured into a Bristol-based company called Inmos, with a manufacturing plant in Newport. As industrial strategy went, it seemed like a smart move.

Round about the same time, in the early 1980s, Dutch electronics firm Philips banded together with a company called Advanced Semiconductor Materials International to create a new firm called ASM Lithography (ASML). The idea was to make the big, complex machines used to “burn” transistors onto silicon chips (much more on this in Material World). It was a ballsy move, especially since the market for photolithography, as it’s called, was totally dominated by Japan. ASML’s first years were hard - very hard. The company came close to collapse but was helped out by Philips and by generous support from the Dutch government.

A few years later still, in the late 1980s, Morris Chang, a Chinese man who had spent most of his working life in the US, working in some of those earliest semiconductor firms including Texas Instruments, was invited to create a semiconductor company in Taiwan. Back then, most semiconductor firms tended to produce chips they themselves designed, but Chang opted to do something else: to make chips on behalf of other chip designers. Taiwan Semiconductor Manufacturing Company (TSMC) had a difficult birth, but it also had extensive support from the Taiwanese state, which gave it sizeable tax breaks and corralled wealthy Taiwanese investors into providing it with capital.

You probably know what happened next, at least with a couple of these examples. TSMC (which, let’s not forget, was founded around a decade after Britain assumed that it shouldn’t enter mainstream chip manufacture because America had it all sewn up) is now the world’s biggest semiconductor manufacturer. ASML is today the world’s only manufacturer of the machines which TSMC and others use to make the most advanced logic chips - the ones that go inside today’s smartphones. The critical thing to note, though, is that for a very long time the conventional wisdom was that these businesses were no-hopers. They looked like duds.

The transputer, on the other hand, began with hype but most certainly was a flop. In truth it would probably always have been a flop; Britain chose the wrong technology. This happens (quite a lot, actually).

But the transputer wasn’t helped, either, by the fact that after a Labour government embarked on this semiconductor strategy in the late 1970s, a Conservative government ditched the strategy and much of their support for the sector in the 1980s. Inmos was promptly sold off to a Franco-Italian company and became part of STMicroelectronics. Companies like Plessey and GEC, which were once shoulder to shoulder with their European counterparts, shrank, looked vulnerable and were sold off. Much of the IP went across the Channel to France, Germany and Italy. The main legacy in the UK today is a cluster of clever semiconductor design companies in Bristol and… Newport Wafer Fab.

But on the other side of the UK there was an undisputed success story. Acorn, a Cambridge-based microcomputer company, made some clever computers but struggled to gain a foothold in the sector. However, Apple spotted that the elegant chip architecture - Reduced Instruction Set Computing (RISC) - used by Acorn in its computers could be deployed in its Newton MessagePad. Out of this came a new company, Advanced RISC Machines or ARM.

Interestingly, ARM’s success is more a product of clever strategy from its directors than industrial strategy by the government. In much the same way as TSMC opted not to design its own chips but to manufacture everyone else’s, ARM opted not to make its own chips but to license its architecture to other firms. Today ARM architecture is totally dominant in much of the semiconductor world. It is a true global champion.

ARM was taken over in 2016 by SoftBank, a Japanese investment group. In any other country it’s hard to imagine a takeover of such a big national champion - especially one whose architecture is used in security-sensitive devices - being waved through. Indeed, the UK government retains “golden shares” in certain important defence companies, including Rolls-Royce and BAE, precisely to prevent such a thing happening. But the takeover came just after the EU referendum. Theresa May cast it as a vote of confidence in the UK and allowed it to go through. Britain’s last remaining semiconductor star was in foreign hands. Today, SoftBank is in the process of re-listing ARM, not in the UK but in the US.

Roll on to today and while ARM still has significant presence in the UK, some wonder how long its British connections will endure. But this company matters, because it is Britain’s only real foothold in the global semiconductor market. Richard Jones, a material scientist who has also documented this sector, believes there is a strong case for the UK government buying ARM, introducing a golden share and then selling it off again.

Such an idea is unlikely to fly with a Conservative government. But the interesting thing about this moment in time is how quickly the old assumptions about what is and isn’t acceptable are shifting. The UK government may not like to use the words, but today’s Semiconductor Strategy document represents the first stirrings in what might end up becoming a genuine 21st century industrial strategy.

It might be a somewhat pathetic, unimaginative beginning. The numbers are tiny, the ambition is weak. There is no mention of energy costs - one of the biggest inputs in semiconductor manufacture and the biggest obstacle in heavy manufacturing in this country. The paper seems to completely miss the fact that Britain, with its sizeable chemicals sector, could plausibly have an opportunity to compete in making some of the chemical inputs used in chip plants around the world. But, hey, it’s a start.

It’s a sign that this government might be ready to begin wrestling with the world as it is today, where countries are battling it out to try to gain supremacy in silicon, and for that matter lithium, copper, hydrogen and the rest. Much money will be spent, much will be wasted, but the outcome of all this may well be a new industrial revolution, not to mention a cleaner and healthier world. Britain might be joining this debate late, but perhaps this is a sign that the mindset is shifting. There may be more such shifts to come.

I hope it’s also the beginning of something else: an epiphany that the world we live in today isn’t dematerialising. It’s reliant on very physical, very real products which are really hard to make (as Geoffrey Dummer would have told you). It depends not just on our ability to turn good ideas into clever programs that run on microprocessors, but also on our ability to turn raw materials we pull out of the ground into ultra pure silicon wafers, and into the gases and liquids we use to make them.

Material World is an effort to try to tell that magical story, from the bottom up. It won’t help you devise an industrial strategy, for semiconductors or steel or indeed anything else. It won’t help you choose between the Transputers and TSMCs of this world. But it might just help you understand some of the hidden underbelly of the economy we’ve long taken for granted.